Honda and AI startup Helm.ai have introduced Helm.ai Vision, a camera-based autonomous driving system. Built for integration with Honda's upcoming 0 Series EV lineup, which includes an SUV and a saloon launching in 2026, the system targets both urban and highway driving. The broader context is that OEMs are adopting software-first autonomy to make electric vehicles smarter, safer, and more accessible. Helm.ai Vision aims to deliver hands-free, eyes-off driving while reducing hardware costs and improving urban mobility in EVs.

Vision-Only Approach for Cost-Efficient EV Autonomy

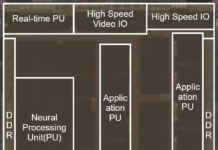

At the heart of Helm.ai Vision is a camera-only approach that eliminates expensive sensors such as radar and lidar. The system fuses surround-view camera feeds into a unified 3D bird's-eye-view representation of the vehicle's surroundings for real-time decision-making. It supports SAE Level 2+ to Level 3 autonomy and is trained with Deep Teaching™, Helm.ai's unsupervised learning pipeline that trains neural networks on large real-world datasets with minimal manual labeling. As a result, EVs equipped with the system can detect objects, navigate intersections, change lanes, and avoid obstacles in a more human-like way, and the software can be deployed at scale.
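To make the bird's-eye-view fusion idea more concrete, here is a minimal Python sketch of one classic building block: projecting ground-contact pixels from several cameras onto a shared top-down grid. It assumes a flat road, zero camera pitch and roll, and simple pinhole intrinsics; the `Camera` fields, `pixel_to_ground`, and `fuse_to_bev` are hypothetical names for illustration only. Helm.ai has not published how its system builds its bird's-eye view, and modern BEV perception often learns this lifting with neural networks rather than fixed geometry.

```python
import math
from dataclasses import dataclass


@dataclass
class Camera:
    """Hypothetical pinhole camera on the vehicle (zero pitch and roll assumed)."""
    fx: float         # focal length in pixels (horizontal)
    fy: float         # focal length in pixels (vertical)
    cx: float         # principal point, column
    cy: float         # principal point, row
    height_m: float   # mounting height above an assumed flat ground plane
    mount_x: float    # forward offset from the vehicle origin (m)
    mount_y: float    # leftward offset from the vehicle origin (m)
    yaw_rad: float    # optical-axis heading, counterclockwise from vehicle forward


def pixel_to_ground(cam, u, v):
    """Intersect the ray through pixel (u, v) with the flat ground plane.

    Returns (x, y) in the vehicle frame (x forward, y left), or None when the
    pixel is at or above the horizon, so the ray never reaches the ground.
    """
    dx = (u - cam.cx) / cam.fx   # camera frame: x points right
    dy = (v - cam.cy) / cam.fy   # camera frame: y points down
    if dy <= 0:
        return None
    t = cam.height_m / dy        # scale the ray (dx, dy, 1) so it drops height_m
    forward = t                  # distance along the optical axis
    left = -t * dx               # camera "right" is the negative of vehicle "left"
    c, s = math.cos(cam.yaw_rad), math.sin(cam.yaw_rad)
    # Rotate by the camera's yaw, then translate by its mounting position.
    x = cam.mount_x + forward * c - left * s
    y = cam.mount_y + forward * s + left * c
    return x, y


def fuse_to_bev(detections, cameras, cell_m=0.5, half_extent_m=50.0):
    """Accumulate ground-contact pixels from all cameras into one BEV grid.

    detections: list of (camera_name, u, v), e.g. the bottom-centre pixel of
    each detected object. Returns a dict mapping (row, col) to a hit count.
    """
    n = int(2 * half_extent_m / cell_m)
    grid = {}
    for name, u, v in detections:
        point = pixel_to_ground(cameras[name], u, v)
        if point is None:
            continue
        x, y = point
        row = int((half_extent_m - x) // cell_m)  # row 0 is farthest ahead
        col = int((half_extent_m - y) // cell_m)  # col 0 is farthest left
        if 0 <= row < n and 0 <= col < n:
            grid[(row, col)] = grid.get((row, col), 0) + 1
    return grid
```

With a forward camera 1.5 m above the road and a 1000-pixel focal length, a pixel 100 rows below the principal point maps to a spot 15 m ahead of the lens. The flat-ground assumption is what reduces this to a single division, and it breaks down on slopes or for points that are not on the road surface.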

Modular Integration Tailored for EV Production

Helm.ai Vision is hardware-agnostic and compatible with compute platforms from Nvidia and Qualcomm. Its modular, OEM-friendly design allows quick integration into EVs such as Honda's 0 Series SUV (built at the Ohio EV hub) and saloon. Honda pairs the system with ASIMO OS, a centralized vehicle operating system, and plans an EV charging ecosystem of more than 100,000 stations by 2030. Licensing this AI-first technology offers EV manufacturers a cost-effective route to scalable autonomy.
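Hardware-agnostic software of this kind is commonly built around an abstraction layer: perception code is written against one interface, and each compute platform gets its own implementation selected by configuration. The Python sketch below illustrates that general pattern under stated assumptions; `PerceptionBackend`, `PerceptionStack`, the registry keys, and both backends are hypothetical, and the "vendor" backend is a stub rather than a call into any real Nvidia or Qualcomm SDK.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict, List

# Hypothetical registry mapping a config key to a backend class.
_REGISTRY: Dict[str, Callable[[], "PerceptionBackend"]] = {}


def register(key: str):
    """Class decorator that makes a backend selectable by a config key."""
    def wrap(cls):
        _REGISTRY[key] = cls
        return cls
    return wrap


class PerceptionBackend(ABC):
    """Hypothetical hardware-abstraction interface: one subclass per platform."""

    @abstractmethod
    def infer(self, frame: List[float]) -> List[float]:
        """Run the (placeholder) perception model on one flattened frame."""


@register("reference-cpu")
class ReferenceCpuBackend(PerceptionBackend):
    def infer(self, frame: List[float]) -> List[float]:
        # Placeholder "model": scale pixel intensities so the brightest is 1.0.
        peak = max(frame) or 1.0
        return [p / peak for p in frame]


@register("vendor-accelerator")
class VendorAcceleratorBackend(PerceptionBackend):
    def infer(self, frame: List[float]) -> List[float]:
        # Stub: a real port would invoke the vendor's runtime here. Reusing
        # the reference math keeps outputs identical across platforms.
        return ReferenceCpuBackend().infer(frame)


class PerceptionStack:
    """Vehicle-side code depends only on the interface, never on a vendor SDK."""

    def __init__(self, backend_key: str):
        if backend_key not in _REGISTRY:
            raise ValueError(f"unknown backend: {backend_key}")
        self.backend = _REGISTRY[backend_key]()

    def process(self, frames: List[List[float]]) -> List[List[float]]:
        return [self.backend.infer(f) for f in frames]
```

In this design, porting to a new chip means adding one registered subclass, while `PerceptionStack` and everything built on it stays unchanged.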

In summary, Helm.ai Vision represents a leap forward in autonomous EV technology. As a vision-only, AI-driven system, it brings safer, hands-free driving to urban environments while slashing sensor costs. Slated for Honda's 0 Series EVs from 2026 onward, it also opens the door to collaborations with other OEMs. AI-based perception is propelling the EV revolution forward.